How Solana Solved Downtime: A Deep Dive Into Network Fixes

How Solana Handles Peak Demand (And Why Past Outages Won't Happen Again)

"Solana goes down all the time."

If you've spent any time in crypto Twitter debates, you've heard this line. And for a while, it was true.

Then came January 2025. The TRUMP memecoin frenzy pushed Solana’s DEXs to ~$38 billion in daily volume on January 18, roughly 10% of Nasdaq’s daily volume. Around the same time, Pump.fun hit an all-time high of 71,739 tokens launched on January 23, and the Jupiter airdrop distributed $616 million to 2 million wallets.

Solana stayed up through all of it.

The last outage was in February 2024, caused by an obscure bug in legacy code, not extreme load. Since then? Nearly 22 months of 100% uptime while processing unprecedented transaction volumes that would have crashed the earlier version of this network.

Let's talk about what actually happened during these stress tests, what changed in Solana's architecture, and why those old failure modes won't happen again.

The Stress Tests That Didn't Break Solana

Case Study 1: Pump.fun Peak Activity (2024-2025)

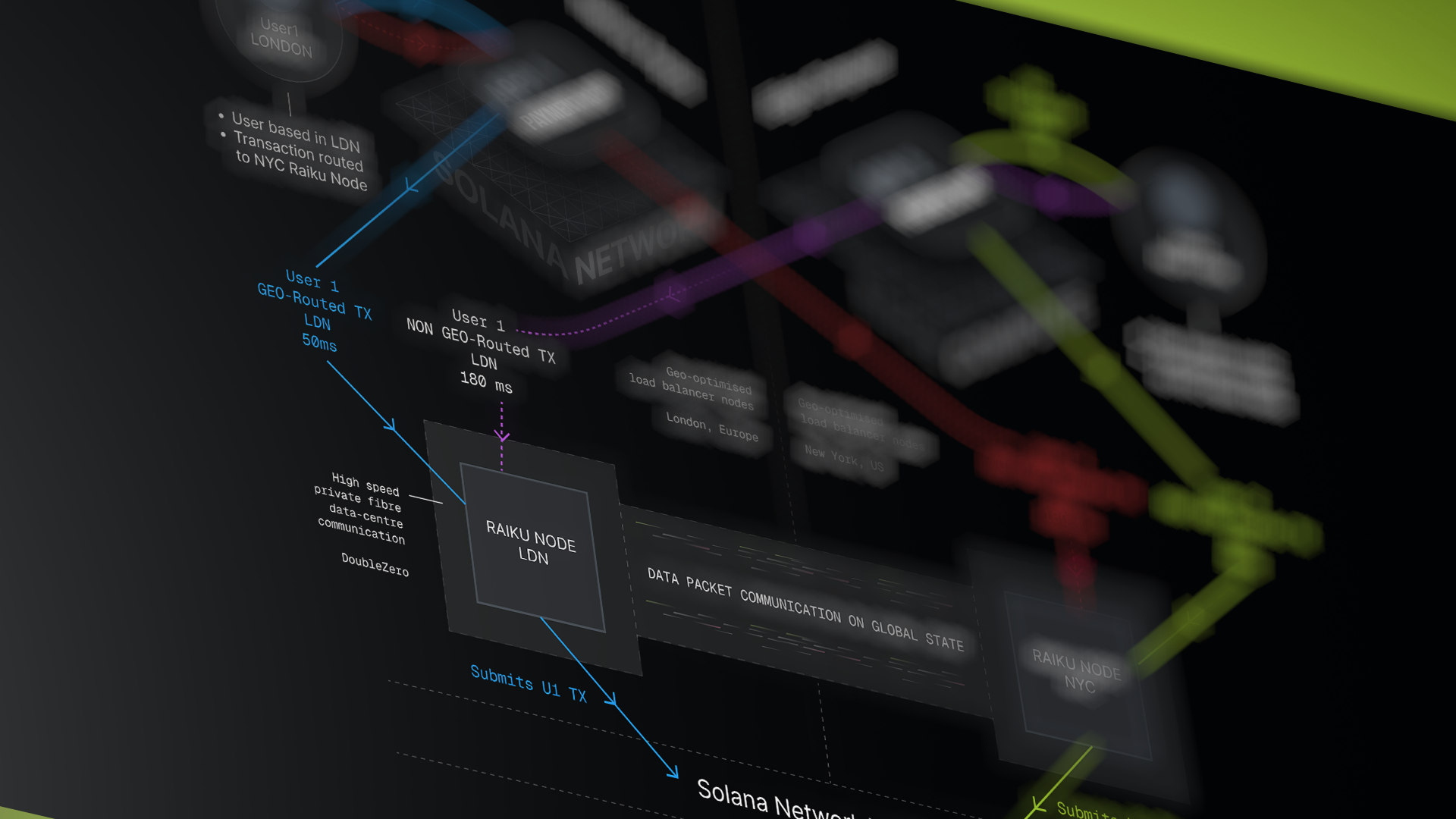

Pump.fun launched in January 2024 as Solana's permissionless token launchpad, letting anyone create memecoins in seconds using a bonding-curve model. Tokens trade inside Pump.fun until they hit a market-cap threshold, then "graduate" to a public DEX. Originally, that meant venues like Raydium or Meteora.
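To make the bonding-curve model concrete, here is a minimal sketch of a constant-product curve with virtual reserves, which is one common way launchpads price new tokens. It is an illustration under stated assumptions, not Pump.fun's actual program. The class name, the starting reserves, and the 400 SOL graduation market cap are all hypothetical. Each purchase pushes the price up along the curve until the market cap crosses the threshold and the token would graduate.

```python
from dataclasses import dataclass


@dataclass
class BondingCurve:
    """Toy constant-product bonding curve with virtual reserves (hypothetical parameters)."""
    sol_reserve: float          # SOL side, including a virtual starting amount
    token_reserve: float        # tokens still on the curve (plus any virtual amount)
    total_supply: float         # total token supply, used for market cap
    graduation_mcap_sol: float  # hypothetical market cap (in SOL) that triggers graduation

    def price(self) -> float:
        """Spot price in SOL per token: the ratio of the two reserves."""
        return self.sol_reserve / self.token_reserve

    def market_cap(self) -> float:
        return self.price() * self.total_supply

    def buy(self, sol_in: float) -> float:
        """Swap `sol_in` SOL for tokens, keeping sol_reserve * token_reserve constant."""
        if sol_in <= 0:
            raise ValueError("sol_in must be positive")
        k = self.sol_reserve * self.token_reserve
        new_sol = self.sol_reserve + sol_in
        new_tokens = k / new_sol
        tokens_out = self.token_reserve - new_tokens
        self.sol_reserve, self.token_reserve = new_sol, new_tokens
        return tokens_out

    def has_graduated(self) -> bool:
        """True once the market cap crosses the threshold; trading would move to a DEX."""
        return self.market_cap() >= self.graduation_mcap_sol


if __name__ == "__main__":
    curve = BondingCurve(sol_reserve=30.0, token_reserve=1e9,
                         total_supply=1e9, graduation_mcap_sol=400.0)
    print(curve.market_cap())  # starting market cap in SOL
    print(curve.buy(10.0))     # tokens received for 10 SOL
    print(curve.market_cap(), curve.has_graduated())
```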

PumpSwap, Pump.fun’s dedicated DEX, is the second half of the system. Once a token leaves its bonding curve, it now moves to PumpSwap, which became the exclusive venue for all “post-curve” trading.

The Scale:

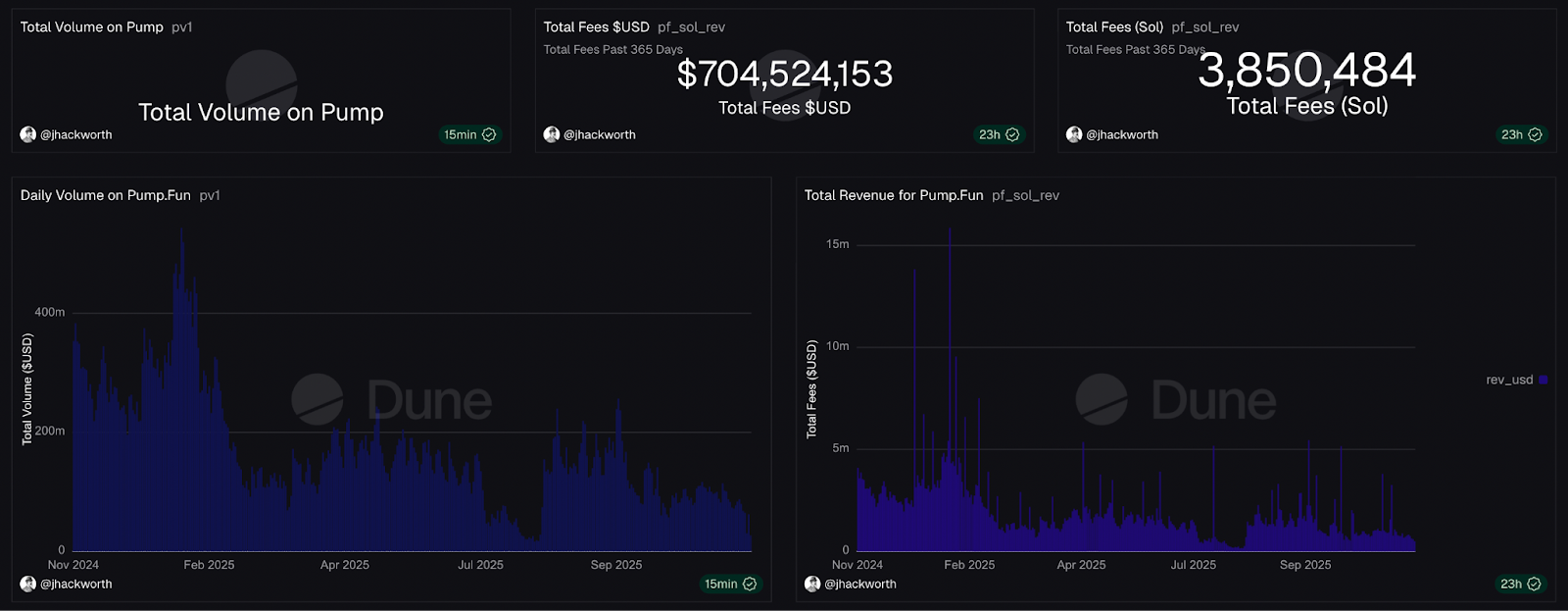

In Q4 2024, Pump.fun became the #1 revenue-generating app on Solana, pulling in $235 million in quarterly revenue, a 242% increase from the previous quarter.

Q1 2025 saw the platform hit peak activity. On January 23, 2025, 71,739 tokens were launched on Pump.fun in a single day, an all-time record. By March, Pump.fun and PumpSwap combined were averaging $279 million in daily volume.

In Q2 2025, PumpSwap became the exclusive venue for graduated tokens, causing Pump.fun's volume to surge 124% to $544 million. By Q3 2025, over 3 million tokens were created on Solana, with Pump.fun remaining the dominant launchpad.

Across 2024-2025, Pump.fun became Solana's memecoin engine, consistently the #1 revenue app and the primary driver of speculative activity on the network.

Network Performance

By early 2025, Solana was processing unprecedented load without breaking. That resilience was the result of systematic engineering improvements made throughout 2024.

Understanding the March-April 2024 Challenge

During the spring 2024 memecoin surge, headlines screamed about Solana's "75-80% transaction failure rate." That sounds alarming, but the number needs context.

To understand it, you need to know what "failed" actually means on Solana. A transaction you submit has one of three outcomes:

1. Success - Transaction executes as expected

2. Failed - Transaction reaches the blockchain, gets processed, but the smart contract rejects it (you still pay the transaction fee)

3. Dropped - Transaction never reaches the block leader at all (no trace onchain)

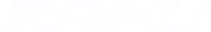

The "failure rate" everyone panicked about was outcome #2, which is not a network problem. About 95% of "failed" transactions were bots spamming arbitrage attempts. Here's why:

Arbitrage bots send hundreds or thousands of transaction attempts trying to capture tiny price differences between exchanges. They spam relentlessly because the economics make sense: spending a few hundred dollars in fees to land one $100K arbitrage is worth it.
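The economics in the paragraph above boil down to an expected-value calculation. The sketch below uses hypothetical numbers (1,000 attempts at $0.30 each for a 50% chance at a $100K arbitrage) and assumes, for simplicity, that every attempt lands onchain and pays its fee. It also shows why bot traffic inflates the failure rate: a bot that wins once in 1,000 landed attempts records 999 "failed" transactions even though the network worked exactly as intended. The function names are illustrative.

```python
def expected_spam_profit(attempts: int, fee_per_attempt_usd: float,
                         win_probability: float, prize_usd: float) -> float:
    """Expected profit of a campaign in which at most one attempt captures the prize.

    Simplifying assumption: every attempt lands onchain and pays its fee,
    including the attempts the program rejects.
    """
    if attempts < 0 or fee_per_attempt_usd < 0 or not 0.0 <= win_probability <= 1.0:
        raise ValueError("invalid inputs")
    total_fees = attempts * fee_per_attempt_usd
    return win_probability * prize_usd - total_fees


def onchain_failure_rate(landed_attempts: int, wins: int) -> float:
    """Fraction of landed attempts that the program rejected."""
    if landed_attempts <= 0 or not 0 <= wins <= landed_attempts:
        raise ValueError("invalid inputs")
    return (landed_attempts - wins) / landed_attempts


if __name__ == "__main__":
    # Hypothetical campaign: 1,000 attempts at $0.30 each for a 50% shot at $100K.
    print(expected_spam_profit(1_000, 0.30, 0.5, 100_000))
    # One winning attempt out of 1,000 landed ones shows up as a 99.9% failure rate.
    print(onchain_failure_rate(1_000, 1))
```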

When these bot transactions "fail," it doesn't mean Solana crashed. It means:

- The blockchain successfully processed the transaction

- But by the time it executed, market conditions had changed

- The smart contract rejected it: slippage exceeded, arbitrage gone, or another bot won
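A "failed" transaction in this sense is the program doing its job. The sketch below models a hypothetical constant-product pool with no trading fees. Real Solana programs are typically written in Rust and real DEXs charge fees, and the function names and pool sizes here are illustrative. A bot quotes a swap against the pool it sees, another trade lands first and moves the price, and the bot's swap is then rejected because its minimum-output check fails.

```python
class SlippageExceeded(Exception):
    """The swap would return less than the caller's minimum acceptable output."""


def quote_out(reserve_in: int, reserve_out: int, amount_in: int) -> int:
    """Constant-product output (x * y = k) with no trading fee, rounded down."""
    if amount_in <= 0 or reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("amounts and reserves must be positive")
    return reserve_out * amount_in // (reserve_in + amount_in)


def swap_exact_in(reserve_in: int, reserve_out: int, amount_in: int, min_amount_out: int) -> int:
    """Execute against the reserves at execution time, enforcing the caller's minimum."""
    amount_out = quote_out(reserve_in, reserve_out, amount_in)
    if amount_out < min_amount_out:
        raise SlippageExceeded(f"output {amount_out} is below minimum {min_amount_out}")
    return amount_out


def outcome(reserve_in: int, reserve_out: int, amount_in: int, min_amount_out: int) -> str:
    """Classify a transaction that reached the chain: the program succeeds or rejects it."""
    try:
        swap_exact_in(reserve_in, reserve_out, amount_in, min_amount_out)
        return "success"
    except SlippageExceeded:
        return "failed"


if __name__ == "__main__":
    # The bot quotes against the pool it observed and accepts no slippage.
    expected = quote_out(1_000_000, 1_000_000, 10_000)
    # Another trader's 100,000-unit swap lands first and moves the pool.
    other_out = quote_out(1_000_000, 1_000_000, 100_000)
    pool_after = (1_100_000, 1_000_000 - other_out)
    print(expected, outcome(*pool_after, 10_000, expected))  # prints: 9900 failed
```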

The Real Problem: Dropped Transactions

The issue that actually hurt users was #3: dropped transactions, meaning legitimate transactions that never reached block leaders at all.

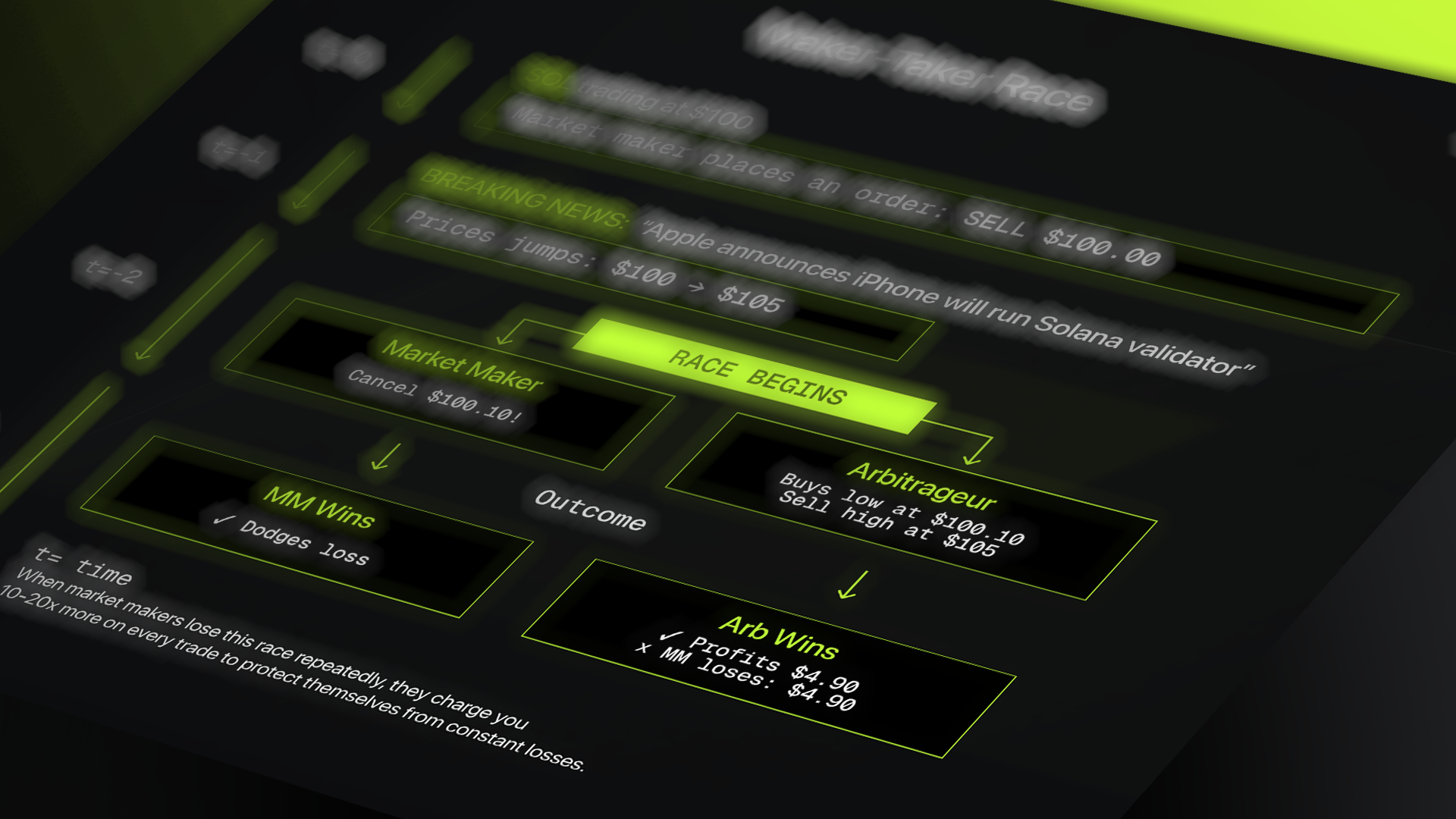

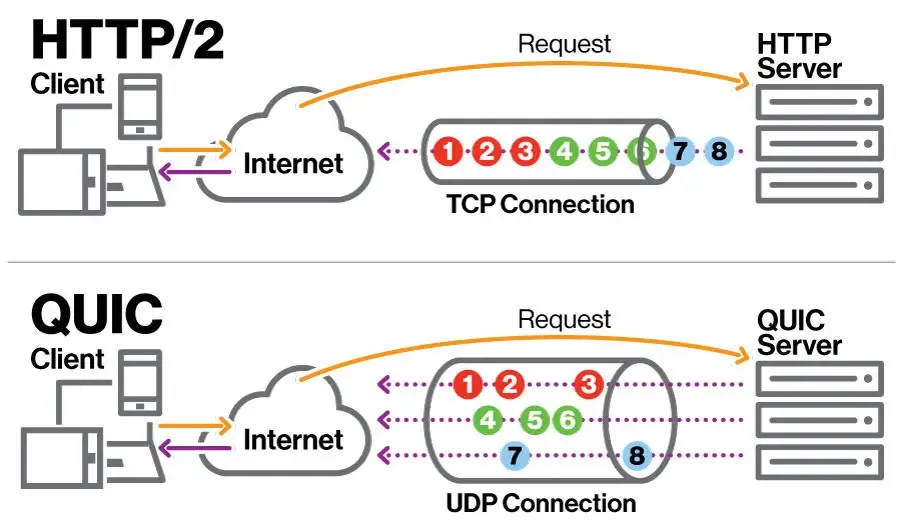

This was a networking-layer problem. During peak activity, block leaders were receiving 10-100x more connection requests than they had capacity to handle. Solana's QUIC implementation could identify and rate-limit connections, but the logic deciding which connections to drop was poorly implemented and buggy.

Instead of dropping connections based on criteria like stake weight or fee priority, connections were being dropped randomly. To get through, you had to spam more connection requests than everyone else. Since bots were flooding the network with connection attempts, regular users couldn't establish connections and their transactions got dropped.
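The toy model below shows why random dropping rewards spam. It assumes the leader admits a fixed number of connection requests chosen uniformly at random. The sender names and request counts are hypothetical, and this is not the actual QUIC server code. Under that rule, each sender's share of admitted connections roughly tracks its share of requests, so a sender that fires off 9x more requests gets roughly 9x more connections.

```python
import random
from collections import Counter


def admit_randomly(requests: dict[str, int], capacity: int, seed: int = 0) -> Counter:
    """Toy model of the pre-fix behavior: admit `capacity` requests picked at random.

    `requests` maps a sender to how many connection requests it sent.
    Returns how many of each sender's requests were admitted.
    """
    rng = random.Random(seed)
    # One entry per request, so a sender that sends more requests is picked more often.
    pool = [sender for sender, count in requests.items() for _ in range(count)]
    admitted = rng.sample(pool, k=min(capacity, len(pool)))
    return Counter(admitted)


if __name__ == "__main__":
    result = admit_randomly({"spam_bot": 9_000, "wallet_user": 1_000}, capacity=1_000)
    print(result)  # spam_bot receives the large majority of admissions
```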

Developers deployed fixes in April 2024 (version 1.17.31) to improve the networking layer.

- Stake-weighted prioritization: Solana upgraded its Quality-of-Service (QoS) rules so block leaders clearly prioritize connections from reputable validators and reliable RPC providers. This prevents low-staked or untrusted nodes from overwhelming leaders with spammy connection requests.

- More efficient packet handling: The QUIC implementation was optimized using smallvec to aggregate packet chunks more efficiently. This reduced memory overhead per packet, cut unnecessary allocation work, and helped leaders process far more packets per second during peak demand.

- Smarter spam filtering: Connections from very low-staked nodes are now treated as “unstaked” in the QoS system. This deprioritizes high-volume bot nodes, ensuring bandwidth is reserved for legitimate users, wallets, and applications even during extreme traffic spikes.

- Improved forwarding logic: BankingStage forwarding filters were tightened so only valid, well-formed transactions move deeper into the pipeline. This prevents block leaders from wasting resources on junk traffic and speeds up the path for real transactions to reach block production.

The Results: Nine months after these upgrades, Solana processed the heaviest memecoin activity in its history with no outages and no consensus failures, and blocks continued to be produced normally. The network went from struggling with connection-layer bottlenecks in March 2024 to handling unprecedented load by January 2025.

Case Study 2: The TRUMP and MELANIA Token Launches (2025)

The $TRUMP token launched on January 17, 2025, followed by $MELANIA on January 19, triggering the largest trading surge in Solana’s history. Over that weekend, Solana processed tens of billions in DEX volume and record user activity, its biggest real-world stress test to date.

The Scale

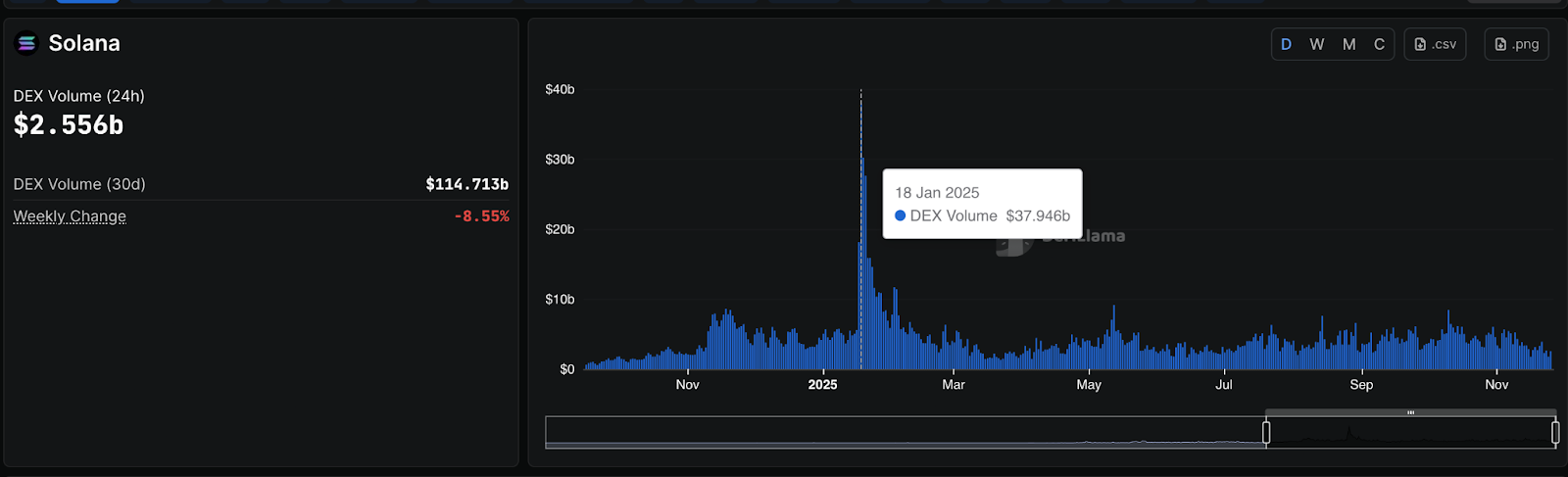

On January 18–19, 2025, Solana processed the largest single-day DEX volume in the history of crypto: ~$38 billion. For perspective, Ethereum's previous single-day record was approximately $6 billion, so Solana's total was more than 6x larger.

In the same 48-hour window, daily active addresses jumped from 1.9 million to 2.7 million, and the network generated $56.9 million in Real Economic Value, an all-time high. Over 10% of Solana's all-time cumulative DEX volume occurred in the seven days surrounding the TRUMP token launch.

The $TRUMP token reignited the memecoin cycle and pulled unprecedented liquidity onto Solana.

The MELANIA token launched in the same January window. Alongside TRUMP and LIBRA, it was one of the primary catalysts behind Meteora’s explosive 3047% QoQ increase in trading volume, which made Meteora the #3 DEX for the quarter.

Network Performance

The TRUMP and MELANIA launches represented Solana's most intense stress test to date. The network processed ~$38 billion in DEX volume in a single day, more than most blockchains process in months.

The Confusion: Did Solana Go Down?

- What people saw: Transaction delays, failed swaps, wallet errors, exchange withdrawal issues lasting up to 21 hours.

- What people thought: "Solana went down again."

- What actually happened: The Solana blockchain never went down. Not for a single second.

Solana's blockchain maintained 100% uptime throughout the whole event. Block production remained active continuously: zero consensus failures, zero network halts. Coinbase explicitly clarified that delays were related to their own infrastructure, not the Solana network.

What struggled was the infrastructure layer, the applications and services built on top of Solana:

- Jito's Block Engine API experienced severe degradation for over three hours due to "unprecedented load levels." While blocks continued processing, transaction submission through Jito's service was impacted.

- Phantom wallet reported handling over 8 million requests per minute, exceeding its capacity and causing transaction delays. The wallet processed 10 million transactions and $1.25 billion in volume over 24 hours.

- Jupiter's price API went down during peak load due to over 7,000 requests per second, causing apps that relied on it to fail to set proper priority fees.

- Coinbase faced delays of up to 21 hours for Solana transactions, not because of the blockchain, but because their infrastructure couldn't keep up with processing the volume.

The key distinction: This wasn't a network problem; it was an infrastructure scaling challenge.

Max Resnick, a former Ethereum researcher who joined Solana core development firm Anza in December 2024, said: "Make no mistake, the Solana of the ore era (march 2024) could not have handled this launch gracefully. The degree to which Solana knocked it out of the park on a technical level last night is astonishing. So much progress in just 9 months and we are just getting started."

By Resnick's account, the Solana of March 2024 could not have handled this load gracefully; nine months later, the chain processed it without a single halt. But the TRUMP launch wasn't the only major stress test Solana survived that year.

Case Study 3: October 10 Market Crash (2025)

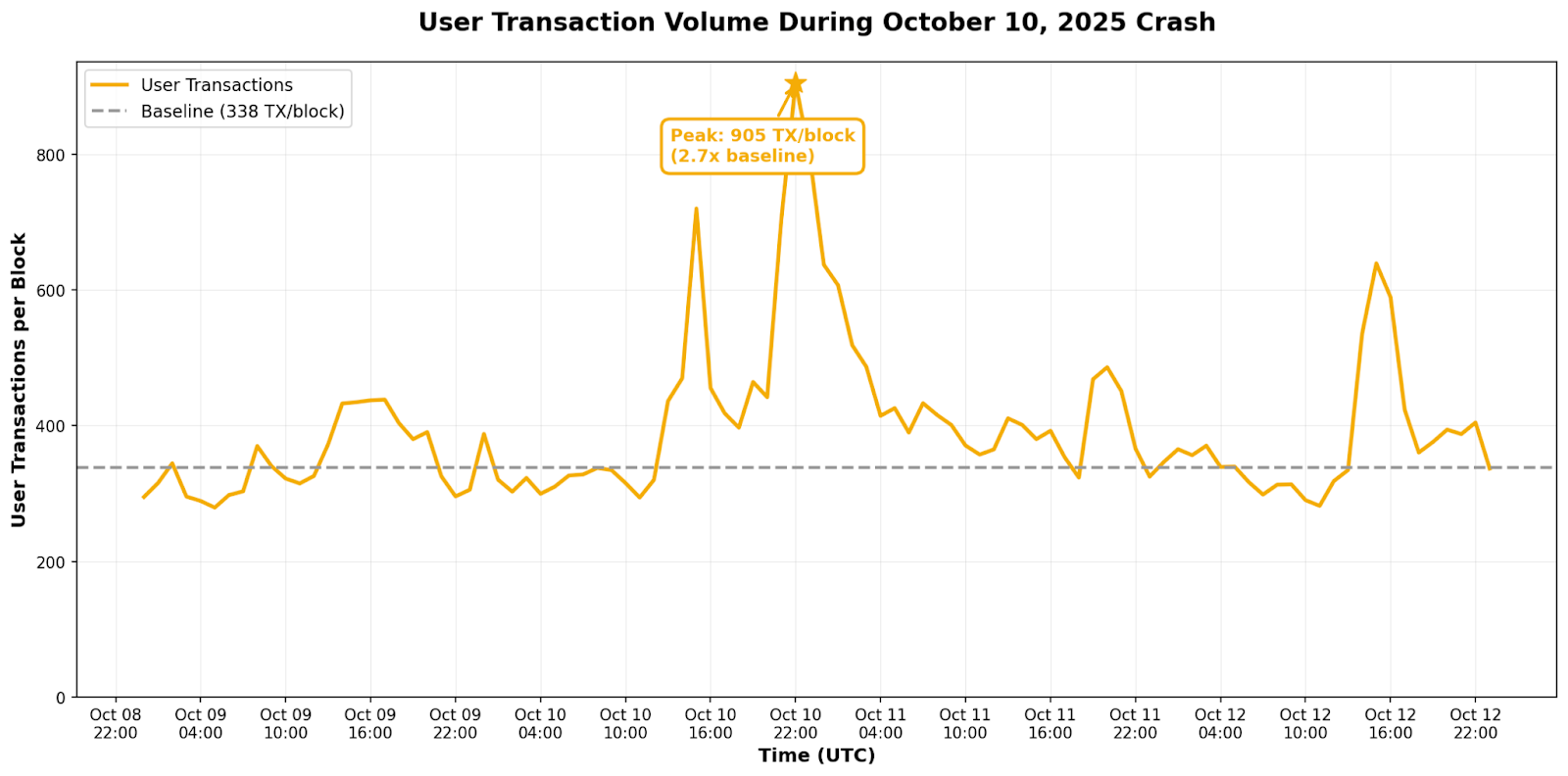

On October 10, 2025, President Trump announced a sweeping 100% tariff on Chinese imports at 20:50 UTC, triggering the largest single-day liquidation event in crypto history. Over the next 29 hours, $19 billion in leveraged positions were liquidated industry-wide, affecting 1.6 million traders across all cryptocurrencies.

The Impact on Solana:

SOL crashed from $229 to $173 over 29 hours, a drop of roughly 24%, as billions in SOL-specific liquidations cascaded through the network. Priority fees spiked to 32x baseline, peaking at 0.443 SOL per block as traders paid any price to exit positions. User transactions surged 2.7x, peaking at 905 transactions per block.

How the Network Performed:

Despite the most violent liquidation event in crypto history, Solana maintained exceptional stability:

- Skip rate: 0.05% during the peak of the crash (vs 0.47% baseline), only 68 skipped slots out of 150,000. Validators were likely more diligent because blocks were so lucrative.

- Slot duration: 395ms (vs 392ms baseline), only a 3ms increase despite the 32x fee spike and 2.7x transaction surge.

- Zero consensus failures, zero network halts. Blocks continued to be produced normally throughout the entire liquidation cascade.
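As a quick consistency check on the figures above, the sketch below recomputes the skip rate (skipped leader slots divided by total slots) and the relative change in slot duration. The helper names are illustrative.

```python
def skip_rate_percent(skipped_slots: int, total_slots: int) -> float:
    """Share of leader slots that produced no block, in percent."""
    if total_slots <= 0:
        raise ValueError("total_slots must be positive")
    return 100.0 * skipped_slots / total_slots


def percent_change(value: float, baseline: float) -> float:
    """Relative change from `baseline` to `value`, in percent."""
    return 100.0 * (value - baseline) / baseline


if __name__ == "__main__":
    print(round(skip_rate_percent(68, 150_000), 3))  # 0.045, which rounds to the quoted 0.05%
    print(round(percent_change(395, 392), 2))         # 0.77: a 3ms rise on a ~392ms slot
```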

The Result: This was a forced liquidation cascade driven by macroeconomic news, fundamentally different from January's memecoin speculation. Within 24 hours, priority fees normalized. Within 48 hours, SOL recovered 18% from its low. The network that survived euphoric speculation also survived the biggest panic sell-off in crypto history, all within the same year.

What Actually Caused Past Outages

Between 2020 and 2024, Solana suffered seven major outages. Here is what broke, what fixed it, and why those failures are permanently resolved.

Problem 1: Transaction Spam Overwhelmed the Network

Solana’s early outages were caused by raw packet floods that the original networking layer could not filter or prioritize.

1. September 2021 – Grape Protocol IDO: Bots flooded Solana with 300,000 transactions per second. One bot write-locked 18 critical accounts, forcing sequential processing. Validators forwarded excess transactions to the next leader, amplifying congestion. RPC nodes endlessly retried failed transactions. Vote transactions got drowned out, stalling consensus. Validators ran out of memory and crashed.

2. April 2022 – Candy Machine NFT Mint: Bots racing for first-come, first-served NFT mints generated 6 million requests per second, over 100 Gbps per node. This was 10,000% more traffic than the September 2021 outage. Validators ran out of memory and crashed. Insufficient voting throughput prevented block finalization. Abandoned forks accumulated faster than they could be cleaned up, overwhelming validators even after restarts. Manual intervention was required to restore the network.

Why the bots won:

Solana had no effective spam defense. It processed everything first-come, first-served, with no way to:

- Filter duplicate transactions

- Rate-limit attackers

- Block abusive sources

- Let legitimate users prioritize their transactions

The economics favored attackers: spending a few hundred dollars in fees could win a $100K+ NFT mint or arbitrage opportunity. Whoever sent the most packets won. Bots just needed volume, not value.

This lack of defense led to the two major outages described above: 17 hours in September 2021 and 8 hours in April 2022.

Solution 1: QUIC Protocol (Released November 2022, v1.13.4)

Solana replaced its old UDP networking layer with QUIC. UDP accepted all packets anonymously and offered no mechanism to trace or slow abusive traffic. QUIC introduces identifiable connections between senders and validators. Each connection can be recognized, rate-limited, or blocked based on behavior.

Attackers now need to establish thousands of separate QUIC connections to spam effectively. Each connection is throttled individually, and validators can block suspicious patterns at the network layer before any transaction reaches the runtime.
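Per-connection throttling is commonly implemented with a token bucket, and the sketch below uses one as an assumption. It is not the actual Agave QUIC server. Each connection gets its own bucket, and each peer is capped at a fixed number of connections, so opening more connections stops helping once the cap is reached. The rate, burst size, per-peer cap, and class names are hypothetical. Time is passed in explicitly so the example is deterministic.

```python
class TokenBucket:
    """Refills `rate` tokens per second up to `burst`; each packet spends one token."""

    def __init__(self, rate: float, burst: float, now: float = 0.0) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so a new connection can send a short burst
        self.last = now

    def allow(self, now: float) -> bool:
        elapsed = max(0.0, now - self.last)
        self.last = max(self.last, now)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # over the limit: this packet is dropped


class ConnectionTable:
    """Hypothetical leader-side table: caps connections per peer and throttles each one."""

    def __init__(self, max_conns_per_peer: int, rate: float, burst: float) -> None:
        self.max_conns_per_peer = max_conns_per_peer
        self.rate = rate
        self.burst = burst
        self.buckets: dict[str, TokenBucket] = {}
        self.conns_by_peer: dict[str, int] = {}

    def open(self, conn_id: str, peer: str, now: float = 0.0) -> bool:
        """Accept a new connection unless the id is taken or the peer is at its cap."""
        if conn_id in self.buckets or self.conns_by_peer.get(peer, 0) >= self.max_conns_per_peer:
            return False
        self.conns_by_peer[peer] = self.conns_by_peer.get(peer, 0) + 1
        self.buckets[conn_id] = TokenBucket(self.rate, self.burst, now)
        return True

    def on_packet(self, conn_id: str, now: float) -> bool:
        """Admit a packet only on a known connection that still has budget."""
        bucket = self.buckets.get(conn_id)
        return bucket is not None and bucket.allow(now)


if __name__ == "__main__":
    table = ConnectionTable(max_conns_per_peer=2, rate=10.0, burst=5.0)
    print([table.open(f"c{i}", "peer-a") for i in range(3)])  # [True, True, False]
    print([table.on_packet("c0", 0.0) for _ in range(6)])    # [True, True, True, True, True, False]
```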

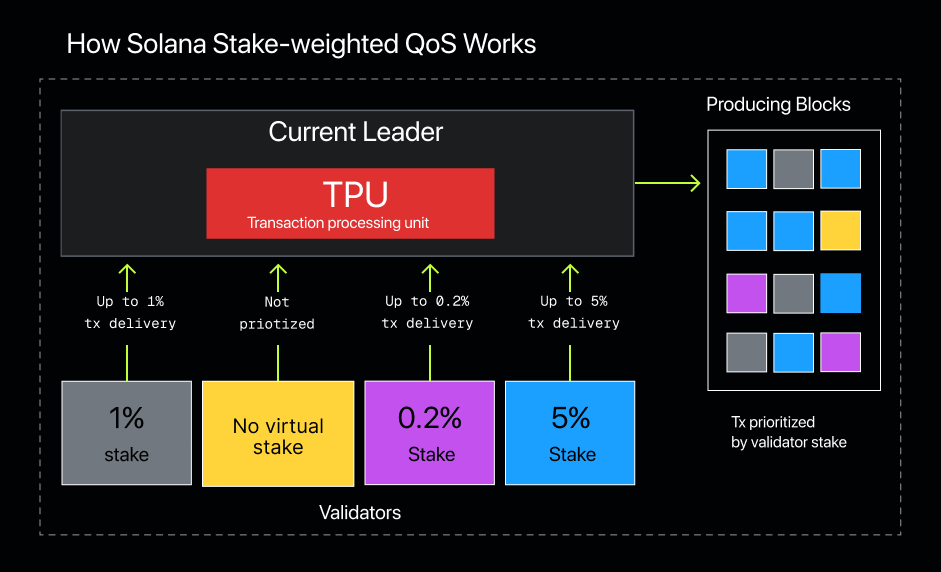

Solution 2: Stake-Weighted Quality of Service (Released 2023)

Stake-Weighted QoS limits how many packets a validator can send to the leader based on its stake. A validator with 1% of total stake can send roughly 1% of the packets the leader accepts from staked connections; a validator with 5% can send roughly 5%.
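Here is a minimal sketch of stake-weighted packet allocation under stated assumptions. The 80/20 split between staked and unstaked capacity, the 1,000 SOL minimum-stake cutoff, and all names are illustrative values chosen for the example. It also applies the rule from the April 2024 upgrade, described earlier, that very low-stake senders are treated as unstaked. A sender's quota depends on its stake, not on how many packets it sends, so flooding beyond the quota only produces drops.

```python
def stake_weighted_quotas(stakes: dict[str, int], capacity: int,
                          min_stake: int, unstaked_percent: int) -> dict[str, int]:
    """Split a leader's packet capacity for one interval among senders (hypothetical model).

    Senders with less than `min_stake` SOL count as unstaked and share
    `unstaked_percent` of capacity equally. Staked senders split the rest in
    proportion to stake, regardless of how many packets they try to send.
    """
    if min_stake < 1 or not 0 <= unstaked_percent <= 100:
        raise ValueError("need min_stake >= 1 and 0 <= unstaked_percent <= 100")
    staked = {s: amt for s, amt in stakes.items() if amt >= min_stake}
    unstaked = [s for s in stakes if s not in staked]
    staked_capacity = capacity * (100 - unstaked_percent) // 100
    total_stake = sum(staked.values())
    quotas = {s: staked_capacity * amt // total_stake for s, amt in staked.items()}
    if unstaked:
        share = (capacity - staked_capacity) // len(unstaked)
        for s in unstaked:
            quotas[s] = share
    return quotas


def admitted(packets_sent: int, quota: int) -> int:
    """Packets beyond a sender's quota are dropped, so spam past the quota gains nothing."""
    return min(packets_sent, quota)


if __name__ == "__main__":
    stakes = {
        "validator_1pct": 4_000_000,
        "validator_5pct": 20_000_000,
        "rest_of_network": 376_000_000,
        "low_stake_bot": 10,  # below the cutoff, so treated as unstaked
        "unstaked_client": 0,
    }
    quotas = stake_weighted_quotas(stakes, capacity=100_000, min_stake=1_000, unstaked_percent=20)
    print(quotas)
    print(admitted(1_000_000, quotas["low_stake_bot"]))  # 10000
```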

To flood the network the way bots did in 2021 and 2022, an attacker would need control of a massive share of staked SOL. With roughly 400 million SOL staked, sending even 30% of packets would require about 120 million SOL, roughly $24 billion at $200 per SOL. Even with that investment, they would still be capped at 30% of packets, and the network would continue operating for everyone else.
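The arithmetic in the paragraph above can be reproduced with its own round numbers: about 400 million SOL staked and a $200 SOL price. Like the paragraph, the sketch treats the attacker's stake as part of the existing ~400 million total; an attacker adding fresh stake on top would need more, since the total grows too. Integer math keeps the result exact, and the function name is illustrative.

```python
def stake_needed_for_share(total_staked_sol: int, share_percent: int,
                           sol_price_usd: int) -> tuple[int, int]:
    """SOL (and its USD value) needed to hold `share_percent` of all stake.

    Under stake-weighted QoS, that stake share is also roughly the attacker's
    ceiling on the leader's staked packet capacity.
    """
    if not 0 <= share_percent <= 100:
        raise ValueError("share_percent must be between 0 and 100")
    stake_sol = total_staked_sol * share_percent // 100
    return stake_sol, stake_sol * sol_price_usd


if __name__ == "__main__":
    sol, usd = stake_needed_for_share(400_000_000, 30, 200)
    print(f"{sol:,} SOL, about ${usd:,}")  # 120,000,000 SOL, about $24,000,000,000
```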

Solution 3: Priority Fees (Released 2023)

Priority fees let users pay a small fee to have their transactions executed before lower-priority ones. Leaders sort transactions by fee per compute unit and process the highest-fee ones first. Bots can no longer dominate through raw volume. To move ahead of real users, they must outbid them on every transaction.
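The sketch below computes fees the way Solana's compute-budget model describes them: a priority fee equal to the compute-unit limit times a compute-unit price quoted in micro-lamports, on top of a 5,000-lamport base fee per signature. The round-up rule, the transaction names, and the simple sort by total fee per compute unit are simplifying assumptions. A real leader's scheduler also has to account for account-lock conflicts and other factors.

```python
from dataclasses import dataclass

BASE_FEE_LAMPORTS_PER_SIGNATURE = 5_000
MICRO_LAMPORTS_PER_LAMPORT = 1_000_000


@dataclass(frozen=True)
class Tx:
    name: str
    compute_unit_limit: int   # compute units requested
    compute_unit_price: int   # priority price, micro-lamports per compute unit
    signatures: int = 1

    def __post_init__(self) -> None:
        if self.compute_unit_limit <= 0:
            raise ValueError("compute_unit_limit must be positive")

    def priority_fee(self) -> int:
        """Limit x price, converted from micro-lamports to lamports (rounded up, assumed)."""
        micro_lamports = self.compute_unit_limit * self.compute_unit_price
        return -(-micro_lamports // MICRO_LAMPORTS_PER_LAMPORT)

    def total_fee(self) -> int:
        return self.signatures * BASE_FEE_LAMPORTS_PER_SIGNATURE + self.priority_fee()

    def fee_per_cu(self) -> float:
        return self.total_fee() / self.compute_unit_limit


def schedule(txs: list[Tx]) -> list[Tx]:
    """Order by fee per compute unit, highest first (ignores account-lock conflicts)."""
    return sorted(txs, key=lambda tx: tx.fee_per_cu(), reverse=True)


if __name__ == "__main__":
    queue = [
        Tx("bot_no_priority", 200_000, 0),
        Tx("wallet_swap", 200_000, 10_000),
        Tx("bot_outbid", 200_000, 50_000),
    ]
    for tx in schedule(queue):
        print(tx.name, tx.total_fee())
```

At these hypothetical settings, the wallet's swap costs 7,000 lamports (0.000007 SOL, about $0.0014 at $200 per SOL), yet it still schedules ahead of a bot that pays no priority fee. To jump the queue, the bot has to outbid it on each transaction it sends.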

During congestion, priority fees rise from fractions of a cent to a few cents, making large-scale spam financially unsustainable. Legitimate users pay slightly more and continue, while spam traffic becomes too expensive to maintain.

Additional Protection: Candy Machine Bot Tax

Metaplex introduced a 0.01 SOL penalty on suspicious Candy Machine mint transactions (wrong timing, incorrect collection ID, etc.). This economic deterrent immediately curbed spam: botters lost 426 SOL in the first few days.

Why these solutions work together:

- QUIC filters spam at the network edge

- Stake-Weighted QoS caps packet flow based on stake, making large-scale abuse prohibitively expensive

- Priority fees ensure legitimate users can always get included during congestion

The April 2024 v1.17.31 networking upgrade, discussed in the Pump.fun case study, further improved connection prioritization and significantly reduced dropped transactions.

The spam attacks were solved through protocol-level changes: QUIC, Stake-Weighted QoS, and priority fees made flooding the network prohibitively expensive. But Solana faced a different type of failure: client bugs that affected nearly every validator simultaneously.

Problem 2: Client Bugs Halted the Network

Between December 2020 and February 2024, five separate client bugs caused network outages:

December 2020 – Turbine Block Propagation Bug (6 hours): A validator transmitted two different blocks for the same slot. Solana tracked blocks by slot number rather than hash, causing network partitions to misinterpret different blocks as identical. Validators couldn't repair or synchronize, preventing consensus.
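The difference between tracking blocks by slot and tracking them by hash can be sketched in a few lines. This is a simplified model, not Solana's blockstore: SHA-256 over the block bytes stands in for the real block hash, and the function names are illustrative. Comparing by slot alone treats two different blocks for slot 5 as the same block, so nothing triggers a repair. Adding the hash to the comparison exposes the conflict.

```python
import hashlib
from collections import defaultdict

Block = tuple[int, bytes]  # (slot, serialized block contents)


def block_id(payload: bytes) -> str:
    """Content hash standing in for a real block hash (SHA-256 is an illustrative choice)."""
    return hashlib.sha256(payload).hexdigest()


def same_block_by_slot(a: Block, b: Block) -> bool:
    """Flawed comparison: any two blocks for the same slot look identical."""
    return a[0] == b[0]


def same_block_by_hash(a: Block, b: Block) -> bool:
    """Fixed comparison: slot and content hash must both match."""
    return a[0] == b[0] and block_id(a[1]) == block_id(b[1])


def duplicate_slots(blocks: list[Block]) -> set[int]:
    """Slots for which more than one distinct block was observed."""
    seen: dict[int, set[str]] = defaultdict(set)
    for slot, payload in blocks:
        seen[slot].add(block_id(payload))
    return {slot for slot, ids in seen.items() if len(ids) > 1}


if __name__ == "__main__":
    # A faulty leader sends different blocks for slot 5 to different parts of the network.
    part_a = (5, b"block X")
    part_b = (5, b"block Y")
    print(same_block_by_slot(part_a, part_b))  # True: nothing looks wrong, so no repair
    print(same_block_by_hash(part_a, part_b))  # False: the conflict is visible
    print(duplicate_slots([part_a, part_b, (6, b"block Z")]))  # {5}
```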

June 2022 – Durable Nonce Bug (4.5 hours): A runtime bug allowed certain transactions to be processed twice. This created non-deterministic behavior: some validators accepted the block, while others rejected it. Because more than one-third of stake accepted it and the rest rejected it, neither side could reach the two-thirds majority needed for consensus.

September 2022 – Duplicate Block Bug (8.5 hours): A validator's primary and backup nodes both activated simultaneously, producing different blocks at the same slot. A fork-choice bug prevented validators from switching to the heaviest fork when needed. Validators became stuck, unable to reach consensus.

February 2023 – Large Block Overwhelm (19 hours): A validator's custom shred-forwarding service malfunctioned and transmitted a block almost 150,000 shreds large, several orders of magnitude above normal. The block overwhelmed deduplication filters, so its data was continuously re-forwarded across the network, saturating Turbine. Block propagation slowed to a crawl.

February 2024 – Infinite Recompile Loop (5 hours): Agave's JIT compiler had a bug causing certain legacy programs to endlessly recompile. When a transaction referencing one of these programs got packed into a block and propagated, every Agave validator hit the same loop and stalled. Since 95%+ of validators ran Agave, consensus failed.

The Root Problem

For most of 2020–2024, over 95% of Solana validators ran the same client codebase: Agave (originally the Solana Labs client) or its close fork Jito-Solana, both written in Rust. When that code had a bug, nearly every validator hit it simultaneously, dropping the network below the 67% threshold needed for consensus.

The pattern was the same every time: one bug in Agave meant network-wide failure because nearly all validators ran identical code.

The Solution: Client Diversity

The permanent fix is running multiple independent validator clients, each built from scratch by different teams in different programming languages.

With stake spread across independent clients so that no single client holds more than a third of it, a bug in one client affects only that client's validators. The other clients continue voting, keeping the network above the 67% threshold and avoiding a halt. One client bug no longer means network-wide failure.
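The halt condition comes down to simple arithmetic. The sketch assumes every validator on a buggy client stops voting and that consensus needs strictly more than two-thirds of stake, which is a simplification since different consensus steps use different thresholds. Stake is given in percentage points, and the function names are illustrative. The first example reuses the approximate shares listed under Current Status while treating each client as fully independent. That is optimistic, because Jito-Solana and Frankendancer share code with Agave.

```python
def survives(stake_by_client: dict[str, int], failed_clients: set[str]) -> bool:
    """True if validators on the remaining clients hold more than two-thirds of stake.

    Simplification: every validator on a failed client stops voting.
    """
    total = sum(stake_by_client.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    live = sum(s for c, s in stake_by_client.items() if c not in failed_clients)
    return 3 * live > 2 * total  # integer form of live / total > 2/3


def clients_whose_bug_halts(stake_by_client: dict[str, int]) -> list[str]:
    """Clients holding enough stake that a bug in them alone would halt the network."""
    return sorted(c for c in stake_by_client if not survives(stake_by_client, {c}))


if __name__ == "__main__":
    print(clients_whose_bug_halts({"jito_solana": 72, "frankendancer": 25, "agave": 3}))  # ['jito_solana']
    print(clients_whose_bug_halts({"a": 30, "b": 30, "c": 25, "d": 15}))  # []
```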

Current Status

Live on mainnet (at time of writing):

Agave (Rust) – ~3% of stakeweight, maintained by Anza. Battle-tested, but its codebase also underpins Jito-Solana and Frankendancer's runtime, so an Agave bug can still reach most of the network.

Frankendancer (C/Rust hybrid) – ~25% of stakeweight, launched September 2024, combines Firedancer's high-performance C components with the Agave runtime.

Jito-Solana (Rust fork) – ~72% of stakeweight, modified Agave with MEV features, reduces Agave dominance but shares most of the same codebase.

Coming late 2025: Firedancer (pure C) – built completely from scratch by Jump Crypto in C, with a different language, architecture, and team; already tested above 1M TPS on testnet.

Why Firedancer Is Critical

Frankendancer and Jito-Solana help, but they still share significant code with Agave. Firedancer is written entirely independently, in C. The February 2024 infinite recompile loop that crashed Agave would not have affected Firedancer, which uses a different program-execution engine, a different architecture, and different code.

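Once Firedancer reaches 25–30% of mainnet stake (late 2025/early 2026), a bug confined to Firedancer could not halt the network on its own, because the remaining 70–75% of stake would keep voting. The reverse does not automatically hold: a bug in Agave-derived code still halts the network if the validators running that code hold more than a third of stake. Single-client bugs become survivable across the board only when no one codebase controls more than a third of stake. At that point, a halt would require independent clients to fail simultaneously with different bugs, a far less likely scenario.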

Conclusion

Solana's transformation from frequent outages to nearly 22 months of 100% uptime came from solving problems at the protocol level.

Spam attacks are now prohibitively expensive: flooding the network requires billions in staked SOL or unsustainable fee costs. Client bugs will no longer halt the entire network once stake is spread across independent implementations so that no single codebase controls more than a third of it.

The network that processed $38 billion in a single day is fundamentally different from the one that crashed under bot traffic in 2021. What broke has been fixed, and those failures can't happen again.