What are Solana's Execution Limits?

TL;DR

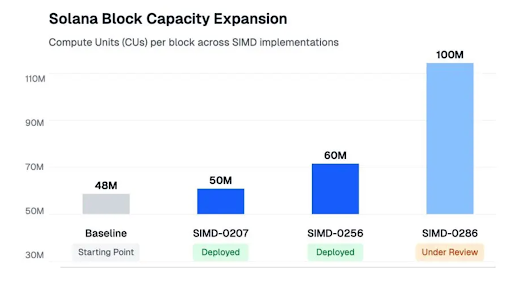

- Solana measures capacity by "work done" not "transaction count." Blocks increased from 48M → 60M Compute Units. A simple token transfer uses ~5,000 CUs. Complex DeFi transactions use way more. When the block hits 60M CUs, it's full.

- Account limits were holding things back. Busy accounts (like popular trading pools) could only use 12M CUs per block, even when the block had unused space. The fix: accounts can now use up to 40% of the block (24M CUs now, 40M when blocks reach 100M).

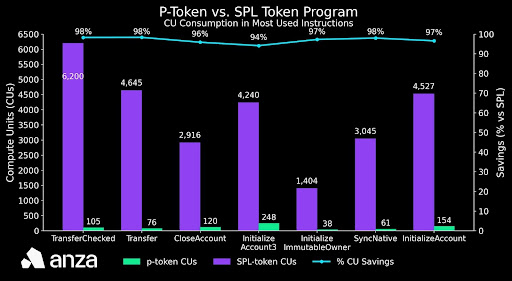

- The token program got way more efficient. Moving tokens around uses about 10% of Solana's capacity. P-Token is a rewrite that uses roughly 95% fewer compute units. Transfer cost dropped from 4,645 CUs → 76 CUs. This frees up massive space without changing block limits.

- XDP makes 100M CU blocks possible. Larger blocks mean validators need to send more data to each other. XDP speeds up packet handling by up to 100x, processing data right at the network card instead of going through slow software layers.

When the markets explode and panic spreads, who wins? The blockchain that doesn't crash.

On October 10th, sudden macroeconomic news triggered massive liquidations. Transaction demand on Solana spiked nearly 3x. Blocks ran at maximum capacity for hours. Slot times stayed stable, and skip rates improved.

This article explains:

- How Solana measures execution work in Compute Units

- Why fixed block limits keep the network stable

- How stress testing validated the 60M CU limit under maximum load

- How Solana scales through better utilization and measured capacity increases

Understanding Compute Units and Block Limits

Throughput on Solana is governed by execution work, not raw transaction count. Capacity is measured in Compute Units (CUs).

Compute Units reflect the actual cost of executing a transaction, including:

- Program instruction execution

- Account loading and locking

- Cross-program invocations (CPIs)

- Data serialization and runtime bookkeeping

A simple SPL token transfer typically consumes around 5,000 CUs, while more complex DeFi transactions can consume hundreds of thousands of CUs. Consumption depends on how much computation and state interaction they require.

The Block Compute Limit

Each Solana block has a fixed execution budget of 60 million CUs, increased earlier this year from 48 million CUs (a 25% increase in block-level compute capacity).

When the cumulative CU usage of transactions in a slot reaches this limit, the block becomes full, regardless of how many individual transactions it contains. This cap limits execution per slot, not how many transaction packets the network can ingest.
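To make the "full block" rule concrete, here is a minimal sketch of a leader filling a block against a CU budget. It is a toy model, not how any validator client actually schedules transactions: the `Tx` type, the `pack_block` helper, and the 400,000 CU cost for a DeFi transaction are all assumptions made for illustration.

```python
from dataclasses import dataclass

BLOCK_CU_LIMIT = 60_000_000  # block-wide budget quoted in this article


@dataclass
class Tx:
    name: str
    cus: int  # compute units the transaction consumes (hypothetical values below)


def pack_block(pending: list[Tx], limit: int = BLOCK_CU_LIMIT) -> tuple[list[Tx], int]:
    """Greedily include transactions while they still fit in the CU budget."""
    included: list[Tx] = []
    used = 0
    for tx in pending:
        if used + tx.cus <= limit:
            included.append(tx)
            used += tx.cus
    return included, used


# Hypothetical queue: 100 heavy DeFi transactions, then 10,000 simple transfers.
pending = [Tx(f"defi-{i}", 400_000) for i in range(100)]
pending += [Tx(f"transfer-{i}", 5_000) for i in range(10_000)]

included, used = pack_block(pending)
print(f"included {len(included):,} of {len(pending):,} transactions using {used:,} CUs")
```

In this made-up queue the block fills on CUs after 4,100 transactions, even though 10,100 were waiting. The count of transactions never appears in the rule, only the sum of their CUs.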

To illustrate the scale:

- 60M CUs ÷ 5,000 CUs ≈ 12,000 simple token transfers per block

- The additional 12M CUs unlocked capacity for roughly 2,400 extra transfers per block (12M CUs ÷ 5,000 CUs)

In practice, real blocks contain a mix of transaction types, so CU limits are the meaningful measure of throughput (not transaction counts).
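The arithmetic above can be reproduced in a few lines. This is a back-of-the-envelope sketch that assumes every transaction is an identical ~5,000 CU transfer, which real blocks never are; the function name `transfers_per_block` is made up for this example.

```python
SIMPLE_TRANSFER_CUS = 5_000  # approximate cost of a simple token transfer (article's figure)


def transfers_per_block(block_limit_cus: int, cus_per_transfer: int = SIMPLE_TRANSFER_CUS) -> int:
    """How many identical transfers fit in a block with the given CU budget."""
    return block_limit_cus // cus_per_transfer


for limit in (48_000_000, 60_000_000, 100_000_000):
    print(f"{limit:>11,} CUs -> {transfers_per_block(limit):>6,} transfers")

extra = transfers_per_block(60_000_000) - transfers_per_block(48_000_000)
print(f"extra transfers unlocked by going from 48M to 60M: {extra:,}")
```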

Why fixed block limits are essential

Solana targets a 400-ms slot time. Within this window, validators must execute the block, verify it, and propagate it to peers across the network. This timing constraint is non-negotiable: it is what keeps the entire validator set synchronized.

Without a CU cap, a leader could pack too much execution into a single block. The consequences would cascade:

- Block replay would spill past the slot boundary

- Validators would fall behind on processing

- Skipped slots would become frequent

- Network liveness would be at risk

Once replay consistently exceeds slot time, the network destabilizes.

The block limit acts as a hard safety rail, converting abstract execution time into a deterministic bound that every validator can rely on. Because all validators must deterministically agree on block validity, this limit must be enforced at the protocol level, not tuned locally by individual clients.

How the 60 Million CU Block Limit was determined

The current 60M CU block limit is calibrated based on:

- Measured execution throughput on commodity validator hardware (not just high-end machines)

- Real-world network propagation capacity under production conditions

- Worst-case replay and verification latency across the validator set

Block limits are intentionally set below theoretical maximums to account for variance in hardware, load, and network conditions. The 60M value represents the highest limit that keeps block production, replay, and propagation safely within the slot budget for the entire network.

October 10th Stress Test Results

Think of it as stress-testing a bridge: engineers might calculate that it can safely carry 60 tons, but that capacity is only truly validated when the load is applied in practice.

On October 10th, a severe crypto market crash pushed Solana into a true worst-case execution regime. Triggered by sudden macroeconomic news, transaction demand surged and block compute limits were driven to their maximum.

What Actually Happened to Block Limits

During the peak of the event, leaders were consistently producing full 60 million CU blocks. Blocks remained saturated for hours while user transaction volume increased by ~2.7× over baseline.

At this point, the network was no longer constrained by demand; it was operating directly at its execution ceiling.

The critical question was simple: what happens when Solana actually hits that ceiling?

https://x.com/jacobvcreech/status/1976883601607413775?s=20

The Answer: Stable Execution at the Limit

Even under sustained saturation, slot timing remained stable.

Average slot duration increased only from ~392 ms to ~395 ms, a difference of just 3 ms (the average eye blink takes around 200 ms), despite blocks being completely full.

This confirms that the 60M CU limit is correctly calibrated: it represents the maximum execution workload validators can process while staying within the 400 ms slot budget.

Liveness (the network's ability to keep producing blocks continuously) was also maintained. The skip rate improved, falling to 0.05% compared to a 0.47% baseline. Elevated fees and MEV incentives encouraged validators to remain online and produce blocks reliably, even under stress.
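To put those percentages in absolute terms, the sketch below converts the skip rates into skipped slots per day. It assumes every slot lasts exactly the 400 ms target, which is a simplification, and the helper names are invented for this example.

```python
SLOT_MS = 400                      # target slot time from the article
MS_PER_DAY = 24 * 60 * 60 * 1000


def slots_per_day(slot_ms: int = SLOT_MS) -> int:
    """Number of slots in a day if every slot hits the target duration."""
    return MS_PER_DAY // slot_ms


def skipped_per_day(skip_rate_bp: int, slot_ms: int = SLOT_MS) -> int:
    """Expected skipped slots per day; the rate is in basis points (1 bp = 0.01%)."""
    return slots_per_day(slot_ms) * skip_rate_bp // 10_000


print(f"slots per day:                 {slots_per_day():,}")
print(f"skipped at 0.47% baseline:     {skipped_per_day(47):,}")
print(f"skipped at 0.05% during event: {skipped_per_day(5):,}")
print(f"headroom at ~395 ms average:   {SLOT_MS - 395} ms per slot")
```

Under that assumption, the baseline corresponds to roughly a thousand skipped slots per day and the event-day rate to roughly a hundred.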

- Before October 10th, the 60M CU limit was supported by benchmarks and testing.

- After October 10th, it was validated in production.

The network operated at full execution capacity for an extended period without:

- Slot time violations

- Widespread skipped blocks

- Loss of liveness

This real-world proof matters because in the future, Solana plans to increase block limits to 100 million CUs. Limits can only be raised safely once the existing ceiling has been shown to hold under extreme, adversarial conditions.

Solving the Per-Account Bottleneck

Proving that Solana can safely operate at the block execution limit does not mean all throughput constraints disappear. Once blocks are consistently full, the limiting factor shifts from total execution per block to how that execution is distributed across accounts.

Before recent upgrades, Solana enforced a fixed per-account compute cap: a single account could consume at most 12 million CUs per block, regardless of how much unused capacity remained elsewhere in the block.

This constraint was designed as a safety measure. It prevented any single account from monopolizing execution and ensured that worst-case serialized execution time stayed bounded. However, under real usage, it introduced a new inefficiency.

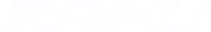

When Hot Accounts hit the ceiling

Many high-throughput applications rely on shared, heavily accessed accounts, such as:

- Order books and market state for DEXs

- Perpetual futures markets

- Global volume or fee accumulators

- Token mint and authority accounts

These “hot” accounts are touched by a large number of transactions every block. In practice, they would frequently hit the 12M CU per-account ceiling, even when the block itself still had significant unused compute capacity.

The result was an artificial bottleneck:

- The block could execute more work overall

- But execution touching a hot account would be throttled

- Additional valid transactions were deferred despite available block capacity

This meant Solana could be block-capacity rich but account-capacity constrained. The October 10th stress test showed that Solana can safely run at full block saturation. Once that ceiling is proven stable, the primary opportunity for higher throughput comes from better utilization of block space, not simply raising the block limit.

In other words:

- The block limit was no longer the problem

- Distribution of compute within the block was

Addressing this required changing how much compute a single account is allowed to consume relative to the block size itself.
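The "block-capacity rich but account-capacity constrained" situation can be reproduced with a toy scheduler. This is a simplified sketch, not the real scheduling logic: it assumes the per-account cap is charged against accounts a transaction write-locks, and the `Tx` type, the `schedule` function, and the workload sizes are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Tx:
    cus: int
    writes: tuple[str, ...]  # accounts the transaction write-locks (assumed accounting)


def schedule(pending: list[Tx], block_limit: int, account_limit: int):
    """Greedy toy scheduler enforcing a block-wide cap and a per-account cap."""
    used_block = 0
    used_by_account: dict[str, int] = defaultdict(int)
    included, deferred = [], []
    for tx in pending:
        fits_block = used_block + tx.cus <= block_limit
        fits_accounts = all(used_by_account[a] + tx.cus <= account_limit for a in tx.writes)
        if fits_block and fits_accounts:
            used_block += tx.cus
            for a in tx.writes:
                used_by_account[a] += tx.cus
            included.append(tx)
        else:
            deferred.append(tx)
    return included, deferred, used_block


# Hypothetical workload: 150 swaps (200k CUs each) all writing one hot pool,
# plus 2,000 unrelated 5k-CU transfers that each write a distinct wallet.
swaps = [Tx(200_000, ("hot_pool",)) for _ in range(150)]
transfers = [Tx(5_000, (f"wallet_{i}",)) for i in range(2_000)]

included, deferred, used = schedule(swaps + transfers, 60_000_000, 12_000_000)
print(f"deferred: {len(deferred)} swaps, block used: {used:,} of 60,000,000 CUs")
```

With the fixed 12M cap, 90 of the 150 swaps are deferred while 38M CUs of block space sit idle. Re-running the same workload with a larger `account_limit` shows how raising the per-account cap converts that idle space into included transactions.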

What is SIMD-0306?

Solana addressed this with SIMD-0306, which makes per-account limits adaptive. Instead of a fixed constant, a single account can now consume up to 40% of the block's total CU budget.

In practice:

- Current 60M CU blocks → 24M CUs per account

- Future 100M CU blocks → 40M CUs per account

This change allows hot accounts to scale in proportion to block capacity, improving throughput and block utilization without increasing worst-case execution risk. The limit automatically adjusts as block limits increase—no additional protocol changes needed.
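The adaptive rule is simple enough to express as a one-line function. This is an illustrative sketch of the 40% relationship described above, not the actual implementation; the function name and the use of integer math are assumptions.

```python
def per_account_limit(block_limit_cus: int, percent: int = 40) -> int:
    """Per-account CU cap as a fixed percentage of the block budget (integer math)."""
    return block_limit_cus * percent // 100


for block_limit in (60_000_000, 100_000_000):
    print(f"{block_limit:>11,} CU block -> {per_account_limit(block_limit):,} CUs per account")
```

Because the cap is derived from the block limit rather than stored as its own constant, raising the block limit is the only change needed for hot accounts to gain headroom.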

Why 40%?

Setting the limit at 40% balances throughput gains with safety. It allows hot accounts to scale meaningfully while preserving space for unrelated transactions and keeping worst-case serialized execution time bounded.

Improving block utilization through adaptive per-account limits is one path to higher throughput. But there's another approach that's equally powerful: reducing how many Compute Units transactions actually need.

P-Token Solana: Making Token Operations 95% More Efficient

The SPL Token Program is Solana's most heavily used program, consuming approximately 10% of all block compute units. Because most transactions include multiple token program invocations (transferring tokens, minting, burning, updating accounts), even small efficiency improvements compound into massive capacity gains across the entire network.

What is P-Token?

P-Token is a complete rewrite of the SPL Token Program from the ground up. It's not a new token standard or a breaking change. It's a drop-in replacement that maintains full compatibility with existing tokens, wallets, and DeFi protocols.

Under the hood, P-Token eliminates unnecessary data copying, reduces memory usage, and streamlines execution paths. The result is dramatic efficiency gains across all token operations.

The Numbers

For the most commonly used operations:

- TransferChecked: 6,200 CUs → 105 CUs (98% reduction)

- Transfer: 4,645 CUs → 76 CUs (98% reduction)

- CloseAccount: 2,916 CUs → 120 CUs (96% reduction)

- InitializeAccount3: 4,240 CUs → 248 CUs (94% reduction)

- MintTo: 4,538 CUs → 119 CUs (97% reduction)

Across these operations, P-Token cuts CU consumption by 94-98%.
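The percentages follow directly from the before and after CU costs. The short sketch below recomputes them from the figures listed above; the dictionary layout and the `reduction_pct` helper are just one way to organize the calculation.

```python
# (before, after) CU costs for each instruction, as listed above
costs = {
    "TransferChecked": (6_200, 105),
    "Transfer": (4_645, 76),
    "CloseAccount": (2_916, 120),
    "InitializeAccount3": (4_240, 248),
    "MintTo": (4_538, 119),
}


def reduction_pct(before: int, after: int) -> float:
    """Percentage of CUs saved when cost drops from `before` to `after`."""
    return (before - after) / before * 100


for name, (before, after) in costs.items():
    pct = reduction_pct(before, after)
    print(f"{name:<20} {pct:5.1f}% fewer CUs ({before / after:4.1f}x cheaper)")
```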

Network-Wide Impact of P-Token

Migrating to P-Token will significantly reduce the block CUs currently consumed by the token program. It also improves composability, because Token program instructions require fewer CUs, and it makes cross-program invocations cheaper for downstream programs.

When you optimize operations that consume 10% of all block compute to use significantly fewer resources, you've effectively increased capacity without touching block limits. And this compounds: a 100M CU block running P-Token has dramatically more effective capacity than a 60M CU block running the old token program. Efficiency gains and capacity increases multiply rather than add.
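A rough way to see why the gains multiply: if a fixed workload spends 10% of its CUs in the token program and P-Token removes about 95% of that cost, the same workload now needs only 90.5% of the CUs it used to. The sketch below works this through. The 95% average reduction and the assumption that the transaction mix stays unchanged are simplifications, and the function names are hypothetical.

```python
TOKEN_SHARE = 0.10  # share of block CUs spent in the token program (article's figure)
REDUCTION = 0.95    # ASSUMED average CU reduction from P-Token (headline figure)


def freed_cus(block_limit: int) -> float:
    """CUs per block no longer spent in the token program, for an unchanged workload."""
    return block_limit * TOKEN_SHARE * REDUCTION


def effective_capacity(block_limit: int) -> float:
    """Block budget measured in 'old workload' CUs.

    The same transaction mix now costs (1 - share * reduction) of what it did,
    so a block fits 1 / (1 - share * reduction) times as much of it.
    """
    return block_limit / (1 - TOKEN_SHARE * REDUCTION)


for limit in (60_000_000, 100_000_000):
    print(
        f"{limit:>11,} CU block: ~{freed_cus(limit):,.0f} CUs freed, "
        f"~{effective_capacity(limit):,.0f} CUs of old-style work fits"
    )
```

Under these assumptions the efficiency factor (about 1.105x) applies on top of whatever the block limit is, which is why a larger block benefits more in absolute terms.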

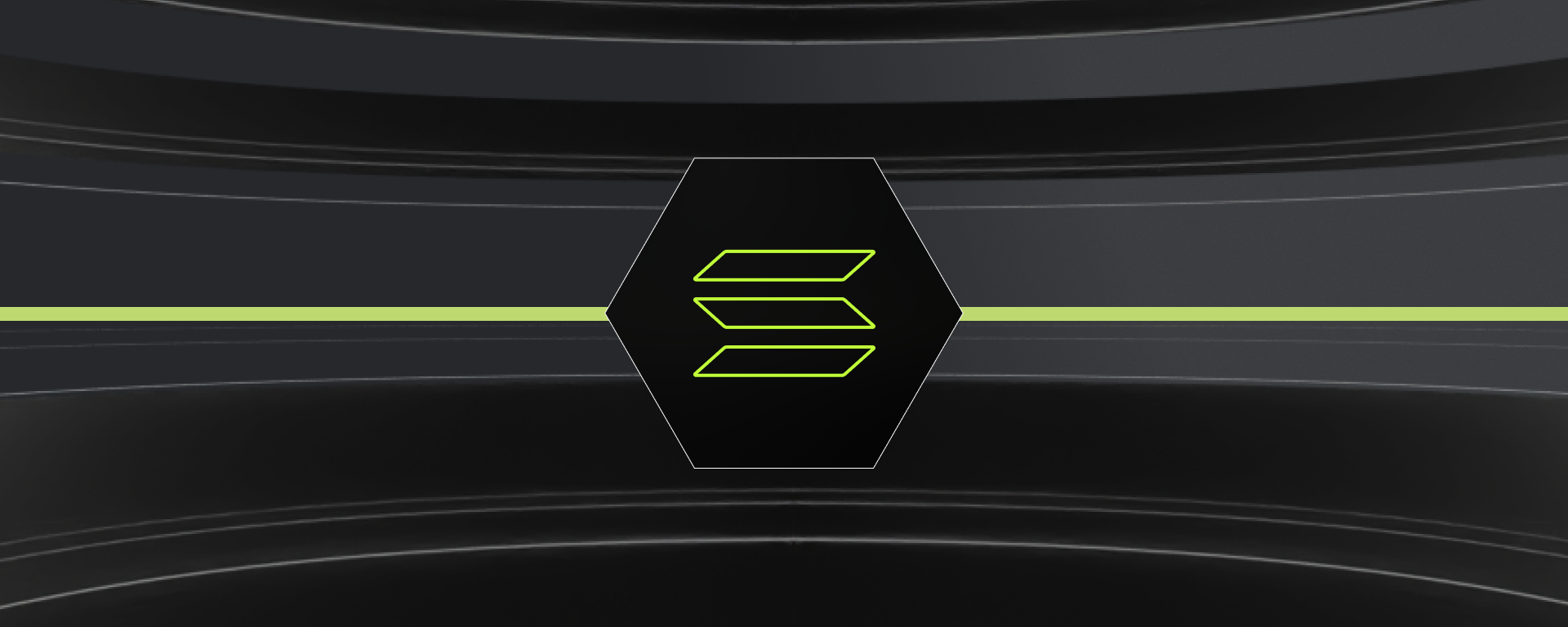

The next major upgrade increases block capacity from 60M to 100M CUs, a roughly 67% jump. But scaling execution capacity reveals a different constraint: network propagation.

Why Propagation Becomes the Bottleneck

When a validator produces a block, it needs to send that data to ~200 other validators. The block gets split into small chunks called "shreds" that are transmitted across the network.

At 60M CUs, large validators already send 150,000 packets per second. At 100M CUs, this increases further. The question becomes: can validators reliably send and receive that many packets within the 400ms slot time?

If validators can't receive shreds fast enough, they can't process the block. This causes skipped slots and threatens the network's ability to stay synchronized.

Execution isn't the problem anymore; propagation is.
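To get a feel for the packet budget, the sketch below converts the 150,000 packets-per-second figure into packets per slot and time available per packet. The projection to 100M CUs assumes packet volume grows linearly with the block CU limit, which this article does not state and which may not hold in practice; all function names are made up for this example.

```python
SLOT_MS = 400                 # slot time from the article
BASE_BLOCK_CUS = 60_000_000
BASE_PPS = 150_000            # packets/s for large validators at 60M CUs (article's figure)


def packets_per_slot(pps: int, slot_ms: int = SLOT_MS) -> int:
    """Packets a validator must send within one slot at the given rate."""
    return pps * slot_ms // 1000


def scaled_pps(block_cus: int) -> int:
    """ASSUMPTION: packet rate scales linearly with the block CU limit."""
    return BASE_PPS * block_cus // BASE_BLOCK_CUS


def ns_per_packet(pps: int) -> int:
    """Average time budget per packet, in nanoseconds, on a single sending path."""
    return 1_000_000_000 // pps


for block_cus in (60_000_000, 100_000_000):
    pps = scaled_pps(block_cus)
    print(
        f"{block_cus:>11,} CUs: {pps:,} pkt/s, "
        f"{packets_per_slot(pps):,} pkts/slot, ~{ns_per_packet(pps):,} ns per packet"
    )
```

A per-packet budget of only a few microseconds leaves very little room for extra copies or context switches on each packet.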

XDP Solana: Enabling 100M Compute Unit Blocks

XDP (eXpress Data Path) makes network packet handling dramatically faster.

Traditional packet processing on Linux moves data through multiple kernel layers, copying it between memory locations and switching execution contexts, all of which adds overhead. XDP cuts through this by handling packets right at the network card, before they hit the kernel's slow path.

Result: packet processing becomes up to 100x faster. Validators can send and receive the higher packet volumes that 100M CU blocks require without hitting the propagation ceiling.

XDP was deployed in Agave 2.3.8 and became standard in Agave 3.1. The infrastructure upgrade is already live.

What Stays Fixed

Two other block limits remain unchanged:

- Vote CUs: 36M per block

- Account data growth: 100 MB per block

These serve different purposes. The vote CU limit prevents the network from being overwhelmed by consensus votes, ensuring voting overhead doesn't crowd out regular transactions. The account data size limit prevents any single block from creating excessive state growth, which would increase storage requirements and slow down validators over time.
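One way to picture how these limits coexist is as independent checks on a block's totals. The sketch below is a simplified model using the numbers from this article: the `BlockLimits` and `BlockUsage` types and the `violations` function are hypothetical, and treating "100 MB" as 100,000,000 bytes is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockLimits:
    block_cus: int = 60_000_000
    vote_cus: int = 36_000_000
    account_data_growth_bytes: int = 100_000_000  # "100 MB"; decimal megabytes assumed


@dataclass
class BlockUsage:
    total_cus: int
    vote_cus: int
    account_data_growth_bytes: int


def violations(usage: BlockUsage, limits: BlockLimits = BlockLimits()) -> list[str]:
    """Return the name of every limit the block's totals exceed."""
    problems = []
    if usage.total_cus > limits.block_cus:
        problems.append("block CUs")
    if usage.vote_cus > limits.vote_cus:
        problems.append("vote CUs")
    if usage.account_data_growth_bytes > limits.account_data_growth_bytes:
        problems.append("account data growth")
    return problems


ok_block = BlockUsage(total_cus=59_000_000, vote_cus=10_000_000, account_data_growth_bytes=5_000_000)
bloated = BlockUsage(total_cus=59_000_000, vote_cus=10_000_000, account_data_growth_bytes=150_000_000)
print(violations(ok_block))   # []
print(violations(bloated))    # ['account data growth']
```

Each check is independent, so a block can stay under the CU budget and still be rejected for creating too much new state.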

Solana's Scaling Roadmap: 48M → 60M → 100M CUs

From 48M to 60M to 100M CUs. From fixed account limits to adaptive scaling. From an unoptimized token program to P-Token's roughly 95% reduction in CU cost. Each upgrade builds on validated infrastructure, compounding capacity gains while maintaining the 400ms slot time that keeps the network synchronized. That's how Solana processes more transactions than every other blockchain combined.